How Do We Maintain Academic Integrity in the ChatGPT Era?

(Hint: It’s really no different from what we should have been doing all along)

As antisemitism and other forms of bigotry surge, higher ed searches for a path forward

Authoritarianism, attacks on DEI, and the weaponization of antisemitism

What has been your most rewarding moment as an educator?

Individual interventions can empower at-risk students to succeed

A college student searches for a personalized queer identity

Academic institutions must change their approach to libraries and librarians

Architecture can foster and strengthen a sense of belonging on campus

The 2023 Delphi Award winners are improving the working conditions of non-tenure-track, contingent, and/or adjunct faculty

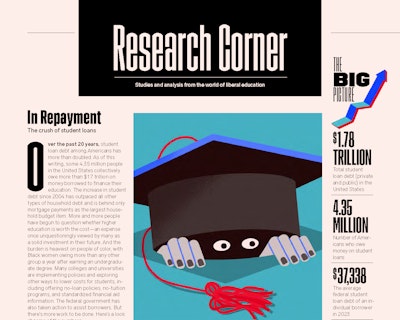

The crush of student loans

A conversation with historian Annette Gordon-Reed on why history is ground zero in the culture wars of today

How hip-hop culture can get students excited about chemistry