Why STEM Students Need to Learn Design Refusal

A rejection of the default mode is, ideally, an invitation to something more equitable

“No one had ever told me that saying ‘no’ was a valid conclusion.”

Shreya Chowdhary, Olin College of Engineering class of 2022, was giving me the postmortem on an aborted community engagement project. With a team of other Olin undergraduate students, Chowdhary had agreed to partner with a nonprofit organization to combat human trafficking. The team planned to build a web-scraping tool that could quickly and automatically harvest online data and, the students hoped, identify and protect trafficking victims.

The students began by studying research on data security but uncovered some thorny issues. Certain efforts to curb human trafficking—such as the Stop Enabling Sex Traffickers Act and Fight Online Sex Trafficking Act—had unintentionally harmed voluntary sex workers by making it more difficult—and thus, more dangerous—for them to find and vet clients.

The Olin students realized that, regardless of their precautions, the tool they were designing could be used to target innocent or vulnerable groups, including voluntary sex workers and the trafficking victims the students were aiming to protect. The students would have no say over whom their nonprofit partner targeted, how the partner used the data, or whether the partner shared data externally (such as with police). The students were also concerned that the partner was focused solely on “extraction”—helping victims escape the immediate dangers of trafficking—with no plans for supporting victims after the intervention. After thoroughly examining the problem and their own values, the students refused to build the tool.

Their refusal floored me. In my own decade-long engineering training, I, like Chowdhary, had never been told that saying “no” was a valid conclusion.

Unless you’ve gone through it, the experience of earning an engineering degree can be hard to understand. Imagine that all through your undergraduate and graduate training, you are handed a steady stream of increasingly hard puzzles. Every class introduces new tricks for solving them, and each puzzle has a correct answer and an accepted solution. You develop a professional identity as someone who is very effective at solving puzzles, no matter how challenging and no matter how morally tricky. Failing to solve a puzzle feels like a personal failure.

This enthusiasm for problem-solving, though, needs to be balanced with a critical perspective that draws on the social sciences and the liberal arts to focus on the humans who will be affected by our technology. Through conversations with her classmates and the project’s advisor, Erhardt Graeff, assistant professor of social and computer science at Olin College, Chowdhary learned to step outside the default engineering mindset that focuses on things rather than people.

“We had taken it as a given that scraping data is OK with a purpose,” Chowdhary says. “I hadn’t thought of the [trafficking] victims as people but just as victims. It’s a dehumanizing way of viewing people.”

To avoid building tools that do more harm than good, we educators in science, technology, engineering, and math (STEM) need to expand our pedagogies and teach that saying “no” is a valid option. We need to teach design refusal.

High-profile commercial success stories in big tech, including those of companies like Facebook and Amazon, have demonstrated the potential of machine learning (ML) and artificial intelligence (AI) technologies to automate repetitive tasks and uncover valuable insights from large data sets. Unfortunately, the use of these technologies so far has been driven largely by profit. As Mike Baiocchi, an assistant professor of epidemiology at Stanford University who helps run the Data Science for Social Good fellowship, bluntly puts it, “Industry is using ML in ways that are capitalist wet dreams.”

Software engineers can furtively gather troves of data to build voter profiles (as in Facebook’s Cambridge Analytica scandal) or deploy communication platforms that spread misinformation and fuel genocide (as in the Myanmar military’s use of Facebook as “a tool for ethnic cleansing,” according to the New York Times).

“I believe companies deliberately keep certain design decisions at the lower level because it protects them legally,” Graeff says. By leaving critical decisions to engineers, upper management can distance itself from scandals and catastrophes, claiming the technical team simply didn’t consider those factors.

Leaving key decisions in the hands of technical teams, however, all but guarantees that important social factors will go unconsidered. Researcher Erin A. Cech has shown that engineering students’ belief in the importance of public welfare tends to decline during their engineering training. Cech attributes these trends to a “culture of disengagement” in engineering that frames nontechnical concerns as irrelevant and devalues social competencies. This culture underscores the real need for projects that energize STEM students to do social good. At Olin College, Chowdhary was initially drawn to the web-scraping project for precisely this reason: the project seemed to contain “that ideal phrase, ‘tech for good,’ that makes you feel so good,” she says.

Baiocchi’s work at Stanford is one of a growing number of efforts nationwide to bring the liberal arts, and particularly their attention to how technology affects people, into technology work. Baiocchi partners with the Brazilian government as a data science consultant in an effort to help stop sexual abuse and human trafficking. Unlike the nonprofit the Olin students had partnered with, the Brazilian government has both preventative and supportive programs in place to protect and aid victims. Further, the data Baiocchi helps gather describe and identify multinational corporations whose corrupt supply chains are involved in human trafficking, rather than individual victims. Still, Baiocchi recognizes the potential for harm. “We’re not naive—we don’t think this group will never exploit us,” he says. “It’s a tough calculation.”

Refusal to design is not always the best response, even in morally gray situations like these. As engineers, our professional responsibility is to use our training to solve problems and thereby do good in the world. Halting completely at the barest hint of unintended consequences would leave engineers ineffective and the users of our technologies powerless, and refusal can look like the easy way out. But that objection attacks a straw man: a refusal can be more than a halt at which engineers throw up their hands and decline to proceed. A refusal can instead be a clear statement that “we’re not going to engage with the default mode” of doing things, says Chelsea Barabas, a PhD student at the Massachusetts Institute of Technology. In her experience, design refusal can be an invitation to a different kind of interaction with partners and users.

Barabas’s first experience with refusal was on the receiving end. Some of her research focuses on Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), a risk-assessment algorithm used to predict how likely it is that a criminal justice defendant will commit another crime. In several states, judges use defendants’ COMPAS scores during sentencing, even though results from the algorithm show “significant racial disparities,” falsely flagging “black defendants as future criminals . . . at almost twice the rate as white defendants,” according to a 2016 study conducted by the investigative journalism nonprofit ProPublica.

Barabas felt that other researchers were too focused on machine bias, ignoring other ways that technology and policy affect minoritized communities in the criminal justice system, and she sought a broader perspective. But she experienced refusal when she approached community partners with ideas for future research.

“Our community partners told us, ‘We don’t want you to do more fancy work on de-biasing,’” Barabas says. Though the ProPublica study resulted in a flurry of academic research articles, it led to few positive changes for those targeted by COMPAS. Barabas’s community partners refused to be just another set of research subjects. Instead, the partners urged her to examine the hierarchy of power—to focus on studying and changing judges’ behavior. Facing refusal encouraged Barabas to reorient her perspective and pursue a different line of research.

To explain this change in orientation, Barabas draws an analogy to the field of anthropology. In the 1970s, anthropologists realized that the knowledge they produced about oppressed populations often supported colonial efforts. In response, some anthropologists began to refuse long-standing disciplinary conventions that centered on the study of non-Western, marginalized peoples. Instead, these anthropologists started studying those holding power and their systems of oppression. Anthropologists such as Laura Nader called this “studying up”—examining the cultures of the powerful, particularly as a way to revitalize democracy. “We tend to center the problem on the people bearing the brunt of the trauma,” Barabas says. “In doing that, we hold up the system that causes the problem.”

In her research on machine learning, Barabas views algorithms as technologies of power and “a modality of governance.” This reframing of her research was sparked by that initial refusal from her community partners. Now, she views refusal not as an end but as a starting point. A rejection of the default mode is, ideally, an invitation to something more equitable. “It’s generative,” Barabas says.

Liberal arts–infused engineering programs such as Olin’s can incorporate more context and ethics into their curricula, broadening what engineering students consider when they approach technical challenges. “If we’re going to get serious about refusal as an essential design practice,” Barabas wrote in OneZero in 2020, “we’ll need to attend to the affective dimensions of engineering, to the ways that desires for productivity, scale, and impact shape our motivations to do certain kinds of work and ask certain kinds of questions.”

Barabas’s research on using the COMPAS algorithm for pretrial risk assessment is one example of why STEM students need to be taught that it’s OK to resist accepting the technical questions posed by powerful or monied interests. Rather than asking how we can more accurately assess pretrial risk, engineering students need to have the sociological awareness to question whether they ought to provide technical support for pretrial detention in the first place.

Despite a renewed focus in some engineering programs on ethics and the effects on humans, design refusal is a bitter pill to swallow for many students—and their faculty. Refusal goes against norms in industry and academia, and the last thing educators want is for students to get fired from future jobs for refusing to design for their employers. It’s important, however, to show our students, who may find refusing a project unpalatable or difficult as an individual, that collective action is often the basis for lasting change. In Chowdhary’s case, she did not refuse alone—the entire student team at Olin College rejected the project. In 2018, Google employees successfully protested a secretive Pentagon contract, according to the New York Times, and Axon—the manufacturer of the Taser—created an AI ethics board when company executives realized that they “wouldn’t be able to hire the best engineers” without such oversight, according to the New Yorker.

This movement toward teaching design refusal is still nascent. Chowdhary, Barabas, and Graeff are working to create new frameworks for refusal in response to data-intensive technologies. Some scholars, like Chowdhary, arrive at this work through their own refusal, which leads them to question how engineering ought to be practiced. Others, like Barabas, find their experience with refusal directly generative, opening new lines of inquiry. And faculty like Graeff are working to implement specific lesson plans that introduce STEM students to the concept of design refusal. The rest of us working in undergraduate STEM education, particularly those with an appreciation for and training in the liberal arts, should embrace the opportunity, and the pedagogy, to help young STEM professionals grasp the gravity of their decisions and see design refusal not as an end but as a beginning.

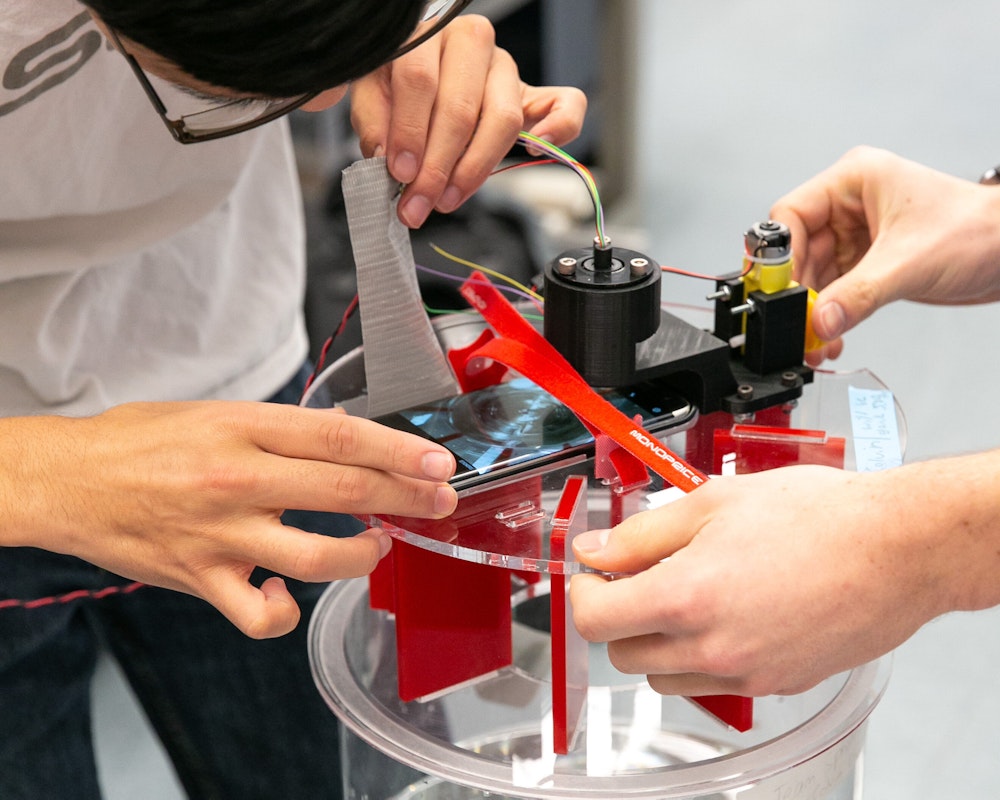

Images credit: Olin College of Engineering